APISCRAPY’s Smart Site Crawler to Bypass Web-Blocking & Anti-Scraping

Estimated Reading Time 9 min

AIMLEAP Automation Works Startups | Digital | Innovation| Transformation

Data is one of the most valuable resources available today. “Data is the new oil,” you’ve probably heard. In fact, data has the potential to be far more valuable and versatile than oil ever could be. The most basic and fundamental use of data is to make informed decisions. It helps us perceive the world around us, allowing us to make better decisions and achieve our objectives. Researchers, marketers, and analysts use site crawlers to collect data from the World Wide Web for market monitoring, trend analysis, and research.

Even in today’s data-driven world, many businesses still romanticize intuition and gut feelings. Though intuition can be useful, basing every decision on gut feelings is not a good idea. Intuition might give you a hunch that leads you down a specific road, but data allows you to check, interpret, and justify your findings.

According to a PwC survey of over 1,000 senior executives, data-driven companies are three times more likely to experience major gains in decision-making than those that depend less on data.

Site crawler software lets you collect data in a variety of formats and from a variety of sources. Like oil, data must be refined before it is useful. Today, many websites employ anti-scraping technologies and techniques to block access to their data; a capable site crawler collects high-quality data from such sites efficiently and in large quantities by getting past these defenses.

Anti-Scraping Technology! What’s That?

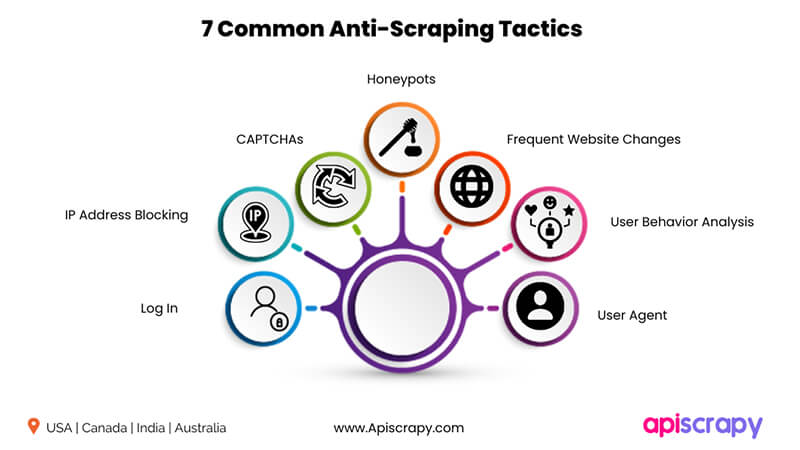

As a growing company, you will often need data from well-known, well-established websites. To keep you from collecting it, these sites put up an iron shield of anti-scraping technologies. Anti-scraping measures make it harder to collect data from a web page automatically; they are all about identifying and blocking requests from bots or malicious users. Let’s look at the seven most widely used anti-scraping tactics and how APISCRAPY’s free web crawler gets around them.

1. Log in

Many websites, such as LinkedIn or Instagram, keep their data behind an authentication or login wall. This is especially common on social media sites like Twitter and Facebook. Only authorized users can access data on a website with a login wall.

APISCRAPY’s site crawler imitates the login procedure to crawl sites that require a login. After logging in to the website, the crawler saves the cookies it receives. A cookie is a small piece of data that stores information about the user’s browsing session. Without cookies, the website would forget that you had already logged in and prompt you to log in again.
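The cookie-reuse idea can be sketched with Python’s standard library. This is a minimal illustration, not APISCRAPY’s implementation: the login URL, the form field names (`username`, `password`), and the cookie file path are hypothetical, and many real login forms also require CSRF tokens or JavaScript.

```python
import http.cookiejar
import os
import urllib.parse
import urllib.request


def make_opener(cookie_file):
    """Build a urllib opener whose cookie jar persists between runs."""
    jar = http.cookiejar.MozillaCookieJar(cookie_file)
    if os.path.exists(cookie_file):
        # Reuse session cookies saved by an earlier login.
        jar.load(ignore_discard=True, ignore_expires=True)
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def login(opener, jar, login_url, username, password):
    """POST credentials to a hypothetical form-based login endpoint.

    The field names "username" and "password" are assumptions; real sites
    use their own names and often add hidden CSRF fields.
    """
    form = urllib.parse.urlencode({"username": username, "password": password})
    with opener.open(login_url, data=form.encode("ascii"), timeout=30) as resp:
        resp.read()
    # Set-Cookie headers from the response are now in `jar`; save them
    # (including session cookies) so later runs can skip the login step.
    jar.save(ignore_discard=True, ignore_expires=True)
```

After `login(...)` succeeds, later calls such as `opener.open(url)` send the saved cookies automatically, so the site treats the crawler as the same logged-in visitor.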

Furthermore, some websites with smart anti-scraping techniques limit access to data even after login, for example to 1,000 lines of data per day. Our free web crawler can easily get past the login wall on such websites and scrape data in large quantities.

2. IP Address Blocking

One of the most basic anti-scraping techniques website owners use is restricting and blocking IP addresses. The website keeps detailed records of the requests it receives and, based on that activity, can determine whether an IP address belongs to a bot.

Making many page visits within a few seconds is a telltale sign: a real human could never navigate a site that quickly. When a website sees a large number of requests arriving from a single IP address, whether at regular or irregular intervals, it is likely to blacklist that address as a suspected bot. Blocking IP addresses can work, but it is only a temporary solution.
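To make the detection side concrete, here is a minimal sketch of the per-IP counting a website might do, assuming a simple sliding-window rule. The limit and window length are illustrative values, not figures any particular site is known to use.

```python
from collections import defaultdict, deque


class SlidingWindowDetector:
    """Flags an IP that sends more than `limit` requests within `window` seconds."""

    def __init__(self, limit=10, window=1.0):
        self.limit = limit
        self.window = window
        self._hits = defaultdict(deque)  # IP -> timestamps of recent requests

    def record(self, ip, now):
        """Log one request from `ip` at time `now`; return True if it looks like a bot.

        Assumes timestamps for a given IP arrive in non-decreasing order.
        """
        hits = self._hits[ip]
        hits.append(now)
        # Forget requests that have fallen out of the window. The loop always
        # stops because `now` itself is in the deque and is never outside it.
        while now - hits[0] > self.window:
            hits.popleft()
        return len(hits) > self.limit
```

With `limit=3` and `window=1.0`, four requests from one address within 0.3 seconds trip the detector, while the same address making a single request a few seconds later does not.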

APISCRAPY’s free site crawler has access to 100+ million IP proxies, which it uses to crawl a site repeatedly for data collection without being blocked. This large proxy pool helps the crawler get around IP blocks on the target website by sending requests from different IP addresses.
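Spreading requests across a proxy pool can be sketched as simple round-robin rotation with urllib. This is a minimal illustration, not how APISCRAPY manages its pool; the proxy addresses are placeholders from a reserved documentation range and must be replaced with real endpoints.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints (TEST-NET-3 documentation addresses).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hands out proxies from a pool in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._cycle = itertools.cycle(proxies)

    def next_opener(self):
        """Return (proxy, opener) where the opener routes traffic via that proxy."""
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)
```

Calling `next_opener()` before each request means consecutive requests leave from different addresses, so no single IP accumulates enough traffic to stand out. Round-robin is the simplest policy; a production pool would also drop proxies that start failing.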

3. CAPTCHAs

Online platforms use a challenge-response test called a CAPTCHA to determine whether a user is human. A CAPTCHA presents a challenge that a person can solve easily but a program cannot; for example, it may ask you to select all pictures of a specific animal or object.

The CAPTCHA is one of the most widely used anti-bot defense mechanisms, especially now that several CDN (Content Delivery Network) providers include CAPTCHAs as built-in anti-bot defenses.

One report found that 16% of the top 50 highest-grossing eCommerce sites use CAPTCHAs for this purpose.

In eCommerce, CAPTCHAs are mostly used as an additional security measure during password resets or after repeated sign-in attempts.

CAPTCHAs block automated, non-human systems from accessing and browsing a website, so they stop scrapers from crawling pages recursively. However, there are ways to get through them automatically. APISCRAPY’s site crawler can bypass CAPTCHAs and solve your problem of delayed data collection. A free web crawler that solves CAPTCHAs is hard to find, and we are making it possible.

4. Honeypots

As the name implies, a honeypot is a “trap” used to trick computer programmes and bots into unintentionally disclosing their identities. It is a ruse designed to appear to be a genuine system.

The goal is to offer something (the “honey”) that attracts bots but is hidden from, or inaccessible to, legitimate human users. Honeypots divert malicious users and bots away from real targets, and they also let security systems observe how attackers behave.

In anti-scraping, a honeypot can be a fake website or online platform. These honeypots often serve inaccurate information, and they can collect data from the requests they receive to train anti-scraping algorithms. They may also fingerprint your requests and track them before blocking them outright.

Avoiding honeypot traps requires a crawler that can recognize them. Our advanced free site crawler identifies traps and collects data with high efficiency.
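A common form of honeypot is a link that human visitors never see but a naive crawler follows. The sketch below, a simple heuristic and not APISCRAPY’s method, uses Python’s built-in HTML parser to set aside links hidden by the `hidden` attribute or an inline `display:none` / `visibility:hidden` style. It does not detect links hidden through CSS classes, external stylesheets, or hidden parent elements.

```python
from html.parser import HTMLParser

# Inline-style markers that hide an element from human visitors.
HIDDEN_MARKERS = ("display:none", "visibility:hidden")


class VisibleLinkParser(HTMLParser):
    """Collects <a href> targets, setting aside links hidden via inline markup."""

    def __init__(self):
        super().__init__()
        self.links = []    # links a human could see and click
        self.skipped = []  # likely honeypot links

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        if not href:
            return
        # Normalize "display: none" to "display:none" before matching.
        style = (attr.get("style") or "").replace(" ", "").lower()
        hidden = "hidden" in attr or any(m in style for m in HIDDEN_MARKERS)
        (self.skipped if hidden else self.links).append(href)


def visible_links(html):
    """Return only the links a human visitor would plausibly see."""
    parser = VisibleLinkParser()
    parser.feed(html)
    parser.close()
    return parser.links
```

A crawler would queue only `visible_links(page_html)` and log `parser.skipped` for review rather than following those URLs.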

5. Frequent Website Changes

To secure their data, some websites change their HTML markup every month or even every week. A scraping bot looks for information in the same locations where it found it before, so by altering the structure of their HTML, websites can mislead the scraping tool and make the needed data harder to obtain.

Furthermore, developers can obfuscate the code. HTML obfuscation makes the markup significantly harder to understand while keeping it fully functional. The information is still present, but it is expressed in an excessively convoluted way.

The site crawler from APISCRAPY can tackle frequent website changes and collect data effortlessly.
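One simple way a crawler can survive layout changes is to keep an ordered list of fallback selectors and use the first one that matches. The sketch below, an illustration rather than APISCRAPY’s approach, does this with CSS class names using only the standard library; the class names in `PRICE_CLASSES` are hypothetical.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextParser(HTMLParser):
    """Collects the text of every element that carries a given CSS class."""

    def __init__(self, css_class):
        super().__init__()
        self.css_class = css_class
        self.depth = 0      # > 0 while inside a matching element
        self.results = []
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1  # nested tag inside a match
        elif self.css_class in (dict(attrs).get("class") or "").split():
            self.depth = 1
            self._buf = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            self.results.append("".join(self._buf).strip())

    def handle_data(self, data):
        if self.depth:
            self._buf.append(data)


# Hypothetical class names for a price field, newest layout first.
PRICE_CLASSES = ["price-v2", "product-price", "price"]


def extract_first(html, candidates):
    """Return (matching class, texts) for the first candidate that matches."""
    for css_class in candidates:
        parser = ClassTextParser(css_class)
        parser.feed(html)
        parser.close()
        if parser.results:
            return css_class, parser.results
    return None, []
```

When the site renames its price element, the crawler keeps working as long as one of the candidates still matches, and a `(None, [])` result is a clear signal that the list needs updating.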

6. User Behavior Analysis

Rather than evaluating and responding to client requests in real time, websites might gather user behavior data over time and respond only when they have enough information. This information includes the order in which pages are visited, how long the user stays on each page, mouse movements, and even how quickly forms are filled out.

Real humans rarely repeat exactly the same behavior. Some websites monitor request frequency, and if requests arrive in the same regular pattern, such as exactly once per second, the anti-scraping system is very likely to be triggered. Websites can block the bot once there is enough evidence that the user’s activity is not human.
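The regularity check described above can be sketched as follows, assuming the site compares the spread of the gaps between requests with their average (the coefficient of variation). The 0.1 threshold and the five-request minimum are illustrative assumptions, not values any specific anti-scraping product is known to use.

```python
import statistics


def looks_scripted(timestamps, max_cv=0.1, min_requests=5):
    """Return True when request intervals are suspiciously regular.

    `timestamps` are request times in seconds, sorted ascending. The check
    uses the coefficient of variation (population stdev / mean) of the gaps
    between consecutive requests; thresholds are illustrative assumptions.
    """
    if len(timestamps) < min_requests:
        return False  # not enough evidence yet
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    mean = statistics.mean(gaps)
    if mean <= 0:
        return True  # every request arrived at the same instant
    return statistics.pstdev(gaps) / mean < max_cv
```

A bot firing exactly once per second, `looks_scripted([0, 1, 2, 3, 4, 5])`, is flagged, while a human-like sequence such as `[0.0, 1.7, 2.1, 4.9, 5.3, 8.8]` is not.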

With APISCRAPY’s site crawler, you can easily get around these types of anti-scraping measures and extract high-quality data. The site crawler software is advanced enough to evade sophisticated anti-scraping technology and streamline your data scraping process.
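On the crawler side, the simplest countermeasure to fixed-cadence detection is to randomize the pause between requests, which also keeps the load on the target site low. This is a minimal sketch, not APISCRAPY’s scheduler; `fetch` is a hypothetical callable you supply, and the default timing values are assumptions.

```python
import random
import time


def polite_crawl(urls, fetch, base=2.0, spread=1.5, rng=None, sleep=time.sleep):
    """Fetch each URL, pausing a randomized interval between requests.

    The pause is `base` seconds plus a uniform random extra in [0, spread],
    so consecutive requests never follow a fixed cadence. `fetch` is any
    callable that downloads one URL (hypothetical; plug in your own).
    """
    rng = rng or random.Random()
    results = []
    for i, url in enumerate(urls):
        if i:  # no wait before the very first request
            sleep(base + rng.uniform(0, spread))
        results.append(fetch(url))
    return results
```

Passing `sleep` and `rng` as parameters lets you test the loop without actually waiting, for example by collecting the chosen delays in a list.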

7. User Agent

Like IP-based blocking, some anti-scraping methods rely on HTTP headers to identify and block malicious requests. Again, the website records the requests it receives, and it blocks those that do not include an acceptable set of values in certain HTTP headers.

The most important header to consider is the User-Agent header. This string identifies the programme, operating system, vendor, and/or version that generated the HTTP request. As a result, your site crawler should always send a valid User-Agent header.

Similarly, the anti-scraping system may block requests without a Referer header (the standard HTTP spelling of “referrer”). This HTTP header is a string containing the absolute or partial address of the web page from which the request was made.
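Both headers can be attached to a request with Python’s urllib, as in the minimal sketch below. The User-Agent string is an example browser-style value (an assumption, not a required or recommended string), and the URLs are placeholders. Note that urllib stores header names in `str.capitalize()` form, so they are read back as `User-agent` and `Referer`.

```python
import urllib.request

# Example browser-style User-Agent string; an assumption, not a required value.
USER_AGENT = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
              "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36")


def build_request(url, referer=None):
    """Build a GET request carrying a User-Agent and, optionally, a Referer."""
    headers = {"User-Agent": USER_AGENT}
    if referer:
        # The page the request appears to come from, e.g. a listing page.
        headers["Referer"] = referer
    return urllib.request.Request(url, headers=headers)
```

Passing the result to `urllib.request.urlopen(...)` sends both headers; when crawling from a listing page to detail pages, using the listing page’s URL as the Referer mirrors what a browser would send.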

Conclusion

Anti-scraping techniques are frustrating when you are collecting data from web sources. Websites deploy all of the techniques and technologies listed above against site crawlers because heavy automated traffic can slow the site down for regular visitors and, in the worst case, cause a denial of service.

APISCRAPY’s well-researched, smart, cutting-edge free web crawler can efficiently handle all types of anti-scraping technologies. Thousands of web pages can be crawled for data collection using our data scraping tool without any interruption. It is intended to remove all the hurdles that appear during data collection to deliver a smoother user experience.

AIMLEAP Automation Practice

APISCRAPY is a scalable data scraping (web & app) and automation platform that converts any data into a ready-to-use data API. The platform can extract data from websites, process data, automate workflows, and integrate ready-to-consume data into a database or deliver it in any desired format. The APISCRAPY practice provides capabilities that help create highly personalized digital experiences, products, and services. Our RPA solutions give customers data-driven insights for decision-making, improve operational efficiency, and reduce costs. To learn more, visit us at www.apiscrapy.com
